EU AI Act Implementation Project Plan: Key Compliance Steps and Risk Classifications

Artificial Intelligence is reshaping industries, business models, and societies at unprecedented speed.

As AI systems increasingly influence decision-making, access to services, critical infrastructures, and fundamental rights, the European Union has taken a leading role in regulating their development and use. The EU Artificial Intelligence Act (“AI Act”) is the first comprehensive regulatory framework for AI worldwide. Its goal is to promote trustworthy, safe, and human-centric AI while fostering innovation and ensuring a functioning internal market.

This article outlines the key elements of the AI Act and:

- Explains how AI systems are classified

- Provides a practical roadmap for compliance

- Clarifies timelines and role-specific obligations.

What is the AI Act and who does it apply to?

The AI Act is an EU Regulation establishing harmonised rules for the development, placing on the market, and use of AI systems in the European Union.

Its objectives are to:

- Ensure that AI systems placed on the EU market are safe and respect fundamental rights

- Enhance governance, oversight, and accountability of AI

- Support innovation through proportionate and risk-based rules

- Promote trust, uptake, and competitiveness of AI in Europe.

The Regulation also establishes the EU AI Office, a specialised unit within the European Commission responsible for supervising General Purpose AI (GPAI) models, coordinating national competent authorities, supporting enforcement, issuing guidance, and monitoring systemic risks across the EU. The AI Office plays a central role in overseeing obligations for GPAI providers, especially those related to transparency, risk mitigation, and systemic-risk assessments.

The AI Act applies broadly and captures all actors involved in the AI value chain, including non-EU organisations whose systems or outputs affect people in the EU.

The AI Act puts obligations on various players:

- Providers placing AI systems on the EU market or putting them into service in the EU

- Deployers of AI systems that are established or located in the EU

- Providers/deployers in third countries, whose AI results are used in/for the EU

- Importers and distributors

- Product manufacturers

- Authorised representatives of providers

Importantly, having a registered office in a third country does not release these parties from their obligations if the AI is intended for use in the EU.

How AI systems are classified: unacceptable, high, limited, and minimal risk

The AI Act follows a risk-based regulatory framework, meaning that obligations increase with the potential impact AI systems may have on individuals, society, and fundamental rights. AI systems are categorised into four main risk levels: unacceptable, high, limited, and minimal risk. Each category is linked to specific requirements, ranging from strict prohibitions to voluntary standards.

1. Unacceptable risk: Prohibited AI (Art. 5 AI Act)

AI systems considered a clear threat to safety, fundamental rights, or democratic values are strictly prohibited in the EU. These include systems used for social scoring or behavioural manipulation, biometric categorisation to infer sensitive data, the untargeted scraping of facial images from the internet or CCTV footage to build facial recognition databases, and emotion recognition in the workplace and in educational institutions (with certain exceptions, e.g. for medical or safety reasons).

However, Member States may, by way of exception and under strict conditions, allow law enforcement authorities to use real-time remote biometric identification systems in publicly accessible spaces, for example to locate suspects of serious criminal offences.

2. High-Risk AI: Compliance Requirements (Art. 6 et seq. AI Act)

These systems involve significant risks, for example to the fundamental rights of natural persons.

These include AI systems that are:

- Used as safety components of products covered by the EU legislation listed in Annex I of the AI Act (e.g. the Toy Safety Directive or the Lifts Directive), or are themselves such products, where the product must undergo a third-party conformity assessment; or

- Listed in Annex III of the AI Act, in particular in the areas of biometrics, critical infrastructure, education and training, employment and human resources management, access to and use of essential private and public services (in particular credit scoring, except for systems used to detect financial fraud), law enforcement, migration, asylum and border control, and the administration of justice and democratic processes.

For further details, see Annexes I and III.

3. Limited Risk: Information and Transparency (Art. 50 AI Act)

These are AI systems that interact with natural persons (e.g. chatbots, voicebots) or generate or manipulate content for them (e.g. deepfakes, synthetic audio, images, video or text). Transparency requirements include, for example, informing individuals when content has been generated by AI. This is particularly important in the fight against deepfakes.

4. Minimal Risk: Voluntary Standards

Most AI systems fall under minimal risk and are not subject to mandatory requirements. Examples include AI-enabled video games, spam filters, and productivity tools.

Providers may apply voluntary codes of conduct to promote ethical and trustworthy AI beyond compliance.

General-Purpose AI (GPAI) Models

General-purpose AI models (Art. 3(63) AI Act) are models that have a broad range of potential uses and can competently perform a wide spectrum of distinct tasks.

Their applications range from generating text, images and video to other multimodal uses. Before such models may be placed on the market, extensive transparency obligations must be met. These include technical documentation, a policy to comply with EU copyright law, and information about training data and testing procedures.

Separately, the AI Act introduces a specific category called “GPAI models with systemic risk” (Art. 51 and 55), which is different from high-risk AI systems. These GPAI models with systemic risk, typically extremely large models exceeding certain compute thresholds, must meet additional obligations such as model evaluations, adversarial testing, and enhanced cybersecurity.

Extra-territorial Scope and AI Act Representative

Providers of GPAI models that are established outside the European Union but place their models on the EU market must appoint, by written mandate, an authorised representative established in the EU (Art. 54). Providers of free and open-source GPAI models are exempt from this requirement, unless their models present systemic risk.

This requirement reflects the extraterritorial scope of the AI Act, similar to the GDPR, meaning it applies not only to EU-based companies but also to foreign providers whose AI systems have an impact within the EU market. Making a model available in the EU, whether through online access, API integration, or distribution via third parties, can trigger this obligation, regardless of the provider’s physical location.

Unlike the GDPR representative under Art. 27 GDPR, the AI Act authorised representative has obligations that resemble product-compliance and product-liability functions. In addition to serving as the provider’s legally accessible point of contact in the EU, the authorised representative must verify that the obligations under Art. 53 and, where applicable, Art. 55 have been fulfilled, keep the technical documentation available to the authorities, handle requests from supervisory authorities and cooperate with them, and support enforcement actions.

The representative’s responsibilities extend to assisting with communications, ensuring ongoing adherence to the provider’s obligations, and cooperating fully with regulators. This requirement applies to organisations of any size, including third-country providers whose AI systems or models are placed on the EU market.

The AI Act representative arguably plays a more substantive role than the GDPR representative in overseeing compliance.

Key compliance requirements and timelines

Achieving compliance with the AI Act is not a one-time exercise but requires a structured, multi-phase approach across the AI lifecycle. The following roadmap outlines five essential steps organisations should take to assess applicability, classify AI systems, and implement the required governance, processes, and documentation in time.

Step 1: Determine whether your system qualifies as AI under the AI Act

The AI Act applies only to systems meeting the regulatory definition of “AI”, aligned with the OECD AI Principles. Before any compliance work begins, organisations must identify which technologies in their organisation qualify as AI under the Act.

Step 2: Assess whether the AI Act applies to your use case

Once a system is identified as AI, assess whether its purpose falls within the Act’s scope. Some use cases are exempt from the Regulation.

The AI Act does not apply to AI:

- Used solely for military, defence or national security purposes

- Developed and deployed exclusively for scientific research and development

- Used for research, testing or development prior to market placement (unless tested under real-world conditions)

- Used by natural persons for purely personal and non-professional purposes

- Released under free and open-source licences (unless the system is high-risk, prohibited, or subject to transparency obligations)

Practical guidance: Assess each exemption on a case-by-case basis and document the legal basis for any exemption you claim.

Step 3: Determine your role in the AI supply chain

Compliance duties vary depending on the role an organisation takes. However, obligations are primarily aimed at the provider of an AI system: anyone who develops AI, or has it developed, and places it on the market or puts it into service under their own name. To avoid gaps in legal protection, other market participants can also become subject to the same obligations. The legal framework of the AI Act is therefore relevant across the entire supply chain, from the developer through importers and distributors to the deployer.

Step 4: Classify the AI System and identify applicable obligations

The level of compliance required depends on the classification of the AI system.

Prohibited and High-Risk AI Systems

Prohibited AI Systems, as the name suggests, cannot be placed on the market or used in the EU.

High-Risk AI Systems can only be placed on the market and used in the EU if specific mandatory obligations are met, including:

- Risk management system and AI risk assessment

- Fundamental rights impact assessment (for certain deployers of Annex III systems)

- Data governance and data quality controls

- Technical documentation (for CE compliance)

- Record-keeping and logging

- Transparency obligations

- Human oversight measures

- Accuracy, robustness and cybersecurity requirements

- Appointment of an Authorised Representative established in the EU for non-EU providers.

Providers must also draw up an EU declaration of conformity and affix the CE marking before placing the system on the market.

Compliance efforts should prioritise high-risk AI systems, as this category entails the most extensive requirements.

General-Purpose AI (GPAI)

All GPAI providers must adequately document the model’s development and training content and provide appropriate information to downstream providers so that they can understand the model. This includes:

- Information on the functions and limitations of the GPAI model

- A policy to comply with EU copyright law

- A summary of the content used for training

Providers of GPAI models with systemic risk must also carry out model evaluations to assess possible risks, meet certain reporting obligations and ensure adequate cybersecurity measures.

Limited-risk AI

Subject to transparency obligations (e.g., disclosure of AI-generated content, chatbot interactions, labelling of deepfakes).

Minimal-risk AI

No mandatory obligations, but voluntary codes of conduct encouraged.
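For organisations building an AI inventory, the order of the checks in Step 4 can be captured in a small triage helper. The Python sketch below is a simplified illustration, not a legal test: the `AISystemProfile` record, its field names and the `classify` function are hypothetical, each boolean flag is assumed to be the outcome of a prior legal assessment, and GPAI models are left out because they follow their own regime. It checks the strictest category first and returns the first one that applies.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "unacceptable risk (prohibited)"
    HIGH = "high risk"
    LIMITED = "limited risk (transparency obligations)"
    MINIMAL = "minimal risk (voluntary codes of conduct)"


@dataclass
class AISystemProfile:
    """Hypothetical inventory record; each flag records the outcome of a legal assessment."""
    name: str
    prohibited_practice: bool = False        # e.g. social scoring or behavioural manipulation
    annex_i_safety_component: bool = False   # safety component of, or itself, an Annex I product
    annex_iii_use_case: bool = False         # e.g. employment, credit scoring, law enforcement
    interacts_or_generates_content: bool = False  # e.g. chatbots, deepfakes, synthetic media


def classify(system: AISystemProfile) -> RiskTier:
    """Return the strictest risk tier whose condition is met, checking strictest first."""
    if system.prohibited_practice:
        return RiskTier.PROHIBITED
    if system.annex_i_safety_component or system.annex_iii_use_case:
        return RiskTier.HIGH
    if system.interacts_or_generates_content:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


chatbot = AISystemProfile(name="customer support chatbot", interacts_or_generates_content=True)
print(classify(chatbot).value)  # limited risk (transparency obligations)
```

In practice the categories are not fully exclusive: a high-risk system that interacts with people can also carry transparency duties, so a real inventory would record every applicable obligation rather than a single tier.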

Step 5: Implement compliance obligations on time

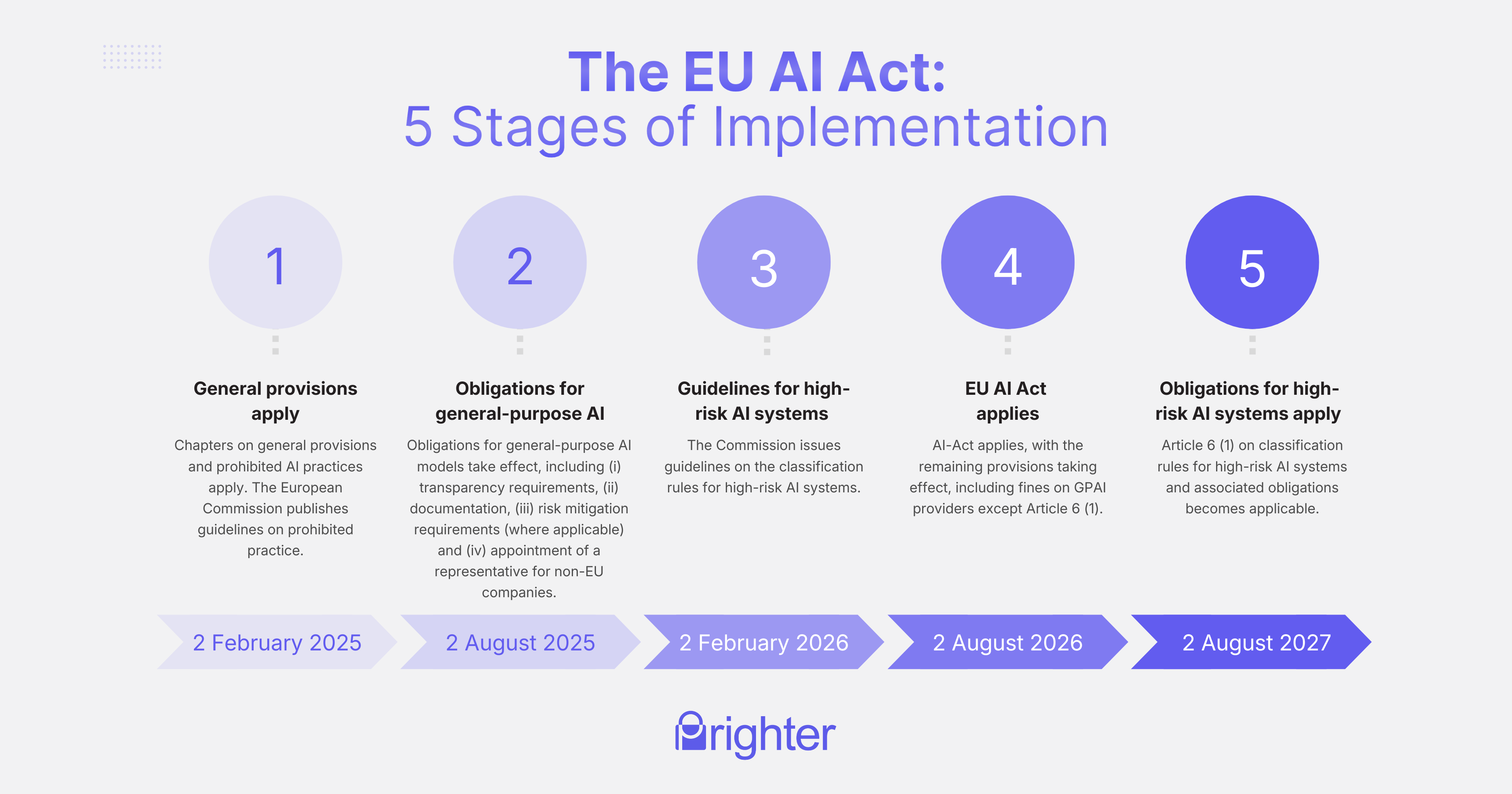

Organisations must plan for timely implementation, as compliance deadlines differ per AI category. Here's an overview:

A structured roadmap ensures readiness for mandatory requirements, including governance, documentation, risk controls and technical adaptation.

The EU AI Act introduces a phased implementation approach to regulate AI technologies based on their level of risk, aiming to balance innovation, safety, and fundamental rights.

While the imminent deadline applies to GPAI, providers should note the previous and future deadlines and be ready to comply as follows:

- 2 February 2025: Chapters on general provisions and prohibited AI practices apply. The Euro

- 2 August 2025: Obligations for general-purpose AI models (including foundation models such as LLMs) take effect. These include transparency requirements, documentation, and potentially risk-mitigation obligations, depending on the capabilities and scale of the model.

- 2 February 2026: The Commission is due to issue guidelines on the classification rules for high-risk AI systems.

- 2 August 2026: The AI Act becomes generally applicable, with the remaining provisions taking effect, including fines for GPAI providers. The only exception is Article 6(1).

- 2 August 2027: Article 6(1), which classifies AI systems used as safety components of Annex I products as high-risk, becomes applicable together with the associated obligations.
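Teams tracking these dates in a compliance dashboard might encode them as data. The sketch below is a hypothetical helper (the `MILESTONES` table and the function names are illustrative) that lists the milestones in force on a given date and finds the next one. It reflects only the dates listed above and does not account for the changes proposed in the Digital Omnibus.

```python
from datetime import date
from typing import List, Optional, Tuple

# Hypothetical milestone table built from the dates above (current AI Act text only).
MILESTONES: List[Tuple[date, str]] = [
    (date(2025, 2, 2), "General provisions and prohibited AI practices"),
    (date(2025, 8, 2), "Obligations for general-purpose AI models"),
    (date(2026, 2, 2), "Commission guidelines on high-risk classification due"),
    (date(2026, 8, 2), "General application of the remaining provisions, except Art. 6(1)"),
    (date(2027, 8, 2), "Art. 6(1) high-risk classification rules and associated obligations"),
]


def milestones_in_effect(on: date) -> List[str]:
    """Return the labels of all milestones dated on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[Tuple[date, str]]:
    """Return the first milestone strictly after `on`, or None if all have passed."""
    for start, label in sorted(MILESTONES):
        if start > on:
            return start, label
    return None


# Prints the three milestones that apply up to and including 2 February 2026.
for label in milestones_in_effect(date(2026, 3, 1)):
    print(label)
```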

AI Act 2.0 at a glance: The Digital Omnibus on AI Regulation Proposal

As organisations begin preparing for the AI Act’s phased compliance deadlines, the European Commission has introduced a “Digital Omnibus” package proposing targeted adjustments to the Act, often referred to as “AI Act 2.0”. The aim is to ease implementation, reduce administrative burden, and strengthen competitiveness while maintaining high standards. The proposal responds to practical challenges identified by stakeholders, including slow designation of competent authorities, limited availability of harmonised standards, and the need for clearer compliance tools.

A central element of the proposal is the adjustment of compliance deadlines:

- For Annex III high-risk AI systems, the current application date of 2 August 2026 would be pushed back. The new deadline would depend on the Commission’s confirmation that the necessary compliance support measures, such as standards and guidance, are available. Once confirmed, providers and deployers would have six months to comply, with a hard backstop of 2 December 2027.

- For Annex I high-risk systems, the rules would apply one year after support measures are deemed available, and no later than 2 August 2028.

- Other proposed elements include removing the registration requirement for Annex III systems that are assessed not to be high-risk (while maintaining internal documentation responsibilities), relocating the AI literacy obligations, broadening the use of AI regulatory sandboxes and real-world testing, and expanding simplifications for SMEs and small mid-caps.
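The proposed deadline mechanism for high-risk systems is simple arithmetic: the rules would apply a fixed period after the Commission confirms that support measures are available, but never later than the backstop date. The Python sketch below illustrates this under stated assumptions: the function names are hypothetical, the six- and twelve-month periods and the backstop dates are taken from the proposal as summarised above, and calendar months are added by clamping to the last day of shorter months, which may differ from how the final legal text counts periods.

```python
import calendar
from datetime import date
from typing import Optional

# Values from the Digital Omnibus proposal as summarised above; the proposal may still change.
GRACE_MONTHS = {"annex_iii": 6, "annex_i": 12}
BACKSTOP = {"annex_iii": date(2027, 12, 2), "annex_i": date(2028, 8, 2)}


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the length of the target month (assumption)."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def proposed_application_date(category: str, confirmation: Optional[date]) -> date:
    """Earlier of (confirmation + grace period) and the backstop; the backstop if never confirmed."""
    backstop = BACKSTOP[category]
    if confirmation is None:
        return backstop
    return min(add_months(confirmation, GRACE_MONTHS[category]), backstop)


print(proposed_application_date("annex_iii", date(2026, 3, 1)))  # 2026-09-01
print(proposed_application_date("annex_iii", date(2027, 9, 1)))  # 2027-12-02 (backstop applies)
```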

The proposal will now move through the EU legislative process, with European Parliament committees (IMCO, ITRE, LIBE) expected to take leading roles. A final text could be adopted as early as Q1 2026 under the urgent procedure, though mid-2026 to Q3 2026 is a more realistic timeline. Stakeholders may still influence the process, with consultations open until 11 March 2026.

The EU AI Act marks a pivotal shift in how artificial intelligence can be developed, deployed, and governed in Europe. Its risk-based regulatory framework sets a global benchmark for responsible AI, ensuring that transparency, safety, human oversight, and fundamental rights remain at the core of innovation.

Prighter’s Perspective

For organisations, the Act requires proactive, forward-looking planning. Early mapping of AI systems, clear role identification within the AI supply chain, and a structured implementation roadmap are essential to avoid costly remediation later.

The European Commission’s new Digital Omnibus proposal underlines this need for preparedness. While the proposal aims to ease implementation by extending certain deadlines and clarifying compliance obligations, it does not reduce the substantive requirements for high-risk AI systems. Instead, it offers a short window for organisations to strengthen governance structures, documentation, and technical controls before enforcement intensifies.

Prighter provides EU representation and compliance support for AI providers, helping organisations prepare for the AI Act’s obligations through structured implementation and monitoring frameworks.

As the regulatory landscape evolves, particularly with the Commission’s Digital Omnibus proposal, Prighter closely monitors legislative developments and updates organisations on the practical implications for their compliance strategies.

Building on our extensive experience with regimes such as the GDPR, and a specialised team of legal professionals, Prighter supports companies in adapting to new requirements, ensuring readiness, accountability, and a smooth market entry into the EU.

If you would like support in maintaining compliance under the EU AI Act, and a range of other global privacy and digital governance frameworks, book a free consultation with our team today.