The EU AI Act Countdown is (Back) on for General-Purpose AI Model Providers

What GPAI providers need to do to comply with the EU AI Act in 2025 and beyond.

A coalition of top executives from leading European companies, including Airbus, ASML, Lufthansa, Mistral, and TotalEnergies, has signed an open letter urging the European Commission to pause the implementation of the EU Artificial Intelligence Act. The group, known as the EU AI Champions Initiative, is requesting a two-year "clock-stop" to allow time for clearer guidelines and simplification, arguing that the current rules are overly complex and disruptive to innovation and business operations.

Despite this high-profile appeal, the European Commission confirmed at a press conference on 4 July 2025 that it will not delay the implementation deadlines of the EU AI Act. A Commission spokesperson stated:

“We have legal deadlines established in a legal text. The provisions kicked in February, general purpose AI model obligations will begin in August, and next year, we have the obligations for high-risk models that will kick in in August 2026.”

Critically, this means the legal timeline remains fixed, and companies must be ready to comply with:

- Obligations for general-purpose AI models from August 2025

- Rules for high-risk AI systems from August 2026

With less than a month to go before the obligations around general-purpose AI (“GPAI”) models come into force, we look here at what general-purpose AI is and what providers of GPAI models will be required to do.

What is general-purpose artificial intelligence?

General-purpose AI (“GPAI”) refers to AI models that display significant generality, are capable of competently performing a wide range of distinct tasks, and can be integrated into a variety of downstream systems or applications (see the definition in Article 3(63) of the AI Act).

Essentially, a GPAI model is one that can apply what it has learned across a wide range of tasks rather than a single, predefined one. It does not need to match human cognitive abilities to qualify. Applications built on such models might include personal assistants, healthcare diagnostics and AI-generated artistic works. These models differ from narrow AI, which is trained to handle a specific task, such as image or speech recognition.

GPAI models are flexible, adaptable, and capable of learning or performing new tasks without explicit programming. Their strength lies in their broad applicability and capacity to serve as core components in various AI systems.

It is important to note that an AI model, including a GPAI model, is not itself an AI system, but rather a foundational technology that powers downstream applications. For example, a GPAI model might be embedded in a customer service chatbot, legal assistant, or creative writing tool, but the model alone is not the complete AI system.

The best-known examples of GPAI models include large language models (LLMs) like OpenAI’s GPT-4.5, Google’s Gemini, or Anthropic’s Claude. These models can engage in conversation, write code, summarise research, compose stories, and assist with decision-making. While not conscious or fully autonomous, they already span a wide variety of applications, which is what brings them within the notion of general-purpose AI.

Obligations on GPAI providers under the EU AI Act

Chapter V of the EU AI Act sets out specific obligations for providers of GPAI models, including:

Documentation and Transparency

GPAI model providers must prepare detailed technical documentation (e.g. training data, validation methods, computational resources, and known or estimated energy consumption) and make a public summary of the training content available. They must also give downstream providers who integrate the model into their own AI systems the information needed to understand the model’s capabilities and limitations. Open-source GPAI models that do not pose systemic risks are exempt from the technical documentation and downstream information requirements, but their providers must still publish the training content summary and maintain a copyright policy.

Compliance with EU copyright law

Providers are required to put in place a policy to comply with EU law on copyright and related rights. In particular, the policy must identify and respect, including through state-of-the-art technologies, reservations of rights expressed by rightsholders.

Systemic Risk Management

If a GPAI model is considered to pose systemic risks, providers have additional obligations, including cybersecurity measures, model evaluations (such as red teaming), risk assessments, and incident reporting.

Cooperation with relevant AI authorities

GPAI providers are required to cooperate with the European Commission and relevant national authorities, providing the information they request so that those authorities can supervise the use of AI within the European Union.

Extra-territorial Scope and AI Act Representative

Providers of GPAI models that do not have an establishment within the European Union must, before placing their models on the EU market, appoint by written mandate an authorised representative established in the EU.

This requirement reflects the extraterritorial scope of the AI Act — similar to the GDPR — meaning it applies not only to EU-based companies but also to foreign providers whose AI systems have an impact within the EU market. Making a model available in the EU, whether through online access, API integration, or distribution via third parties, can trigger this obligation, regardless of the provider’s physical location.

The representative serves as the primary point of contact between the provider and the AI Office and national competent authorities, and plays a crucial role in ensuring that the provider complies with the AI Act’s obligations. The representative’s responsibilities include handling documentation requests and cooperating with relevant authorities. This requirement applies regardless of company size to third-country providers whose models reach the EU.

Implementation steps and timeline

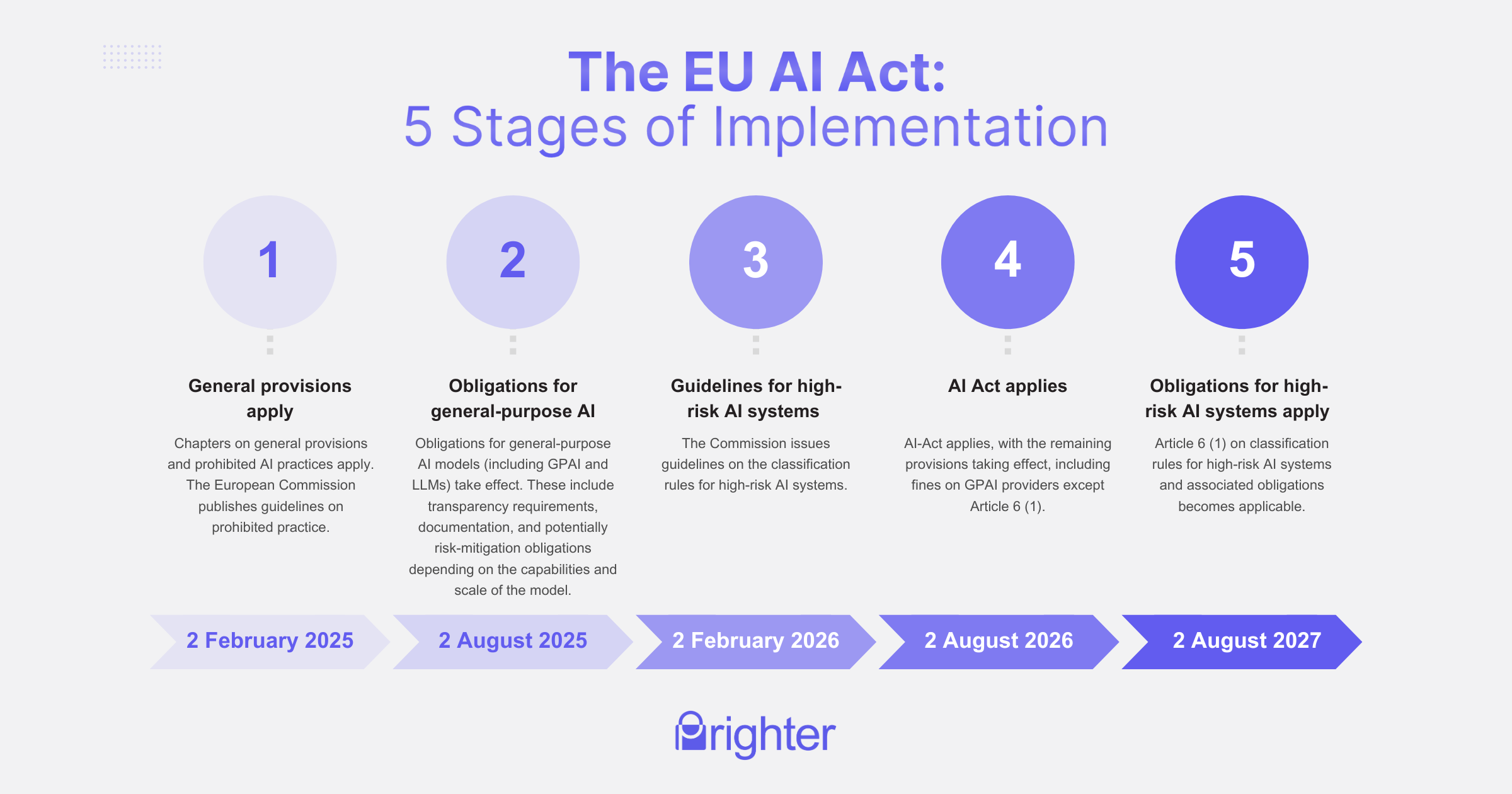

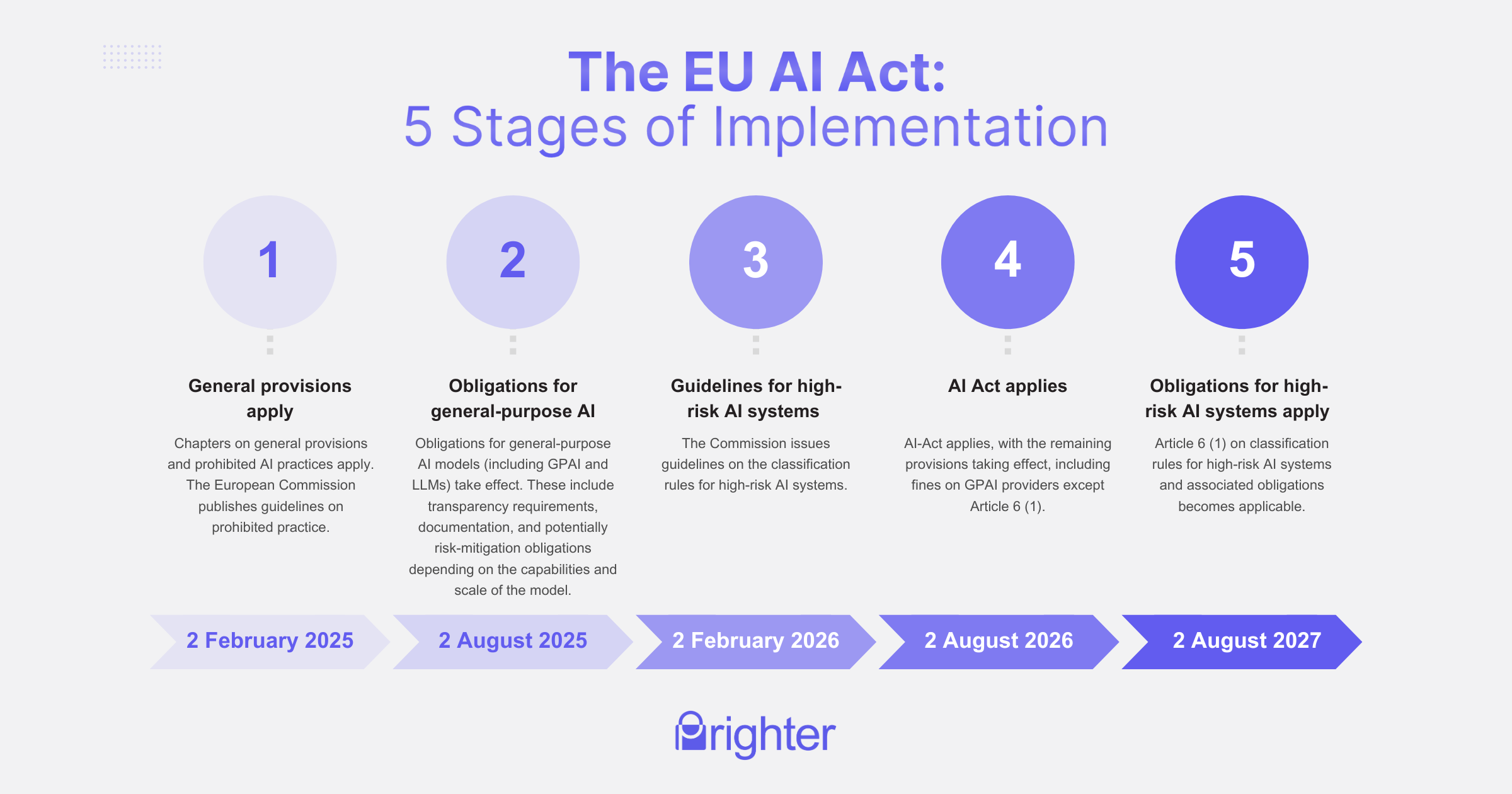

The EU AI Act introduces a phased implementation approach to regulate AI technologies based on their level of risk, aiming to balance innovation, safety, and fundamental rights. While the imminent deadline applies to GPAI, providers should note the previous and future deadlines and be ready to comply as follows:

- 2 February 2025: Chapters on general provisions and prohibited AI practices apply. The European Commission has published guidelines on prohibited practices.

- 2 August 2025: Obligations for providers of general-purpose AI models (including foundation models such as LLMs) take effect. These include transparency requirements, documentation, and potentially risk-mitigation obligations depending on the capabilities and scale of the model.

- 2 February 2026: The Commission is due to issue guidelines on the classification rules for high-risk AI systems.

- 2 August 2026: The AI Act applies in general. The remaining provisions take effect, including fines on GPAI providers, with the exception of Article 6(1).

- 2 August 2027: Article 6(1) on classification rules for high-risk AI systems and the associated obligations become applicable.
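For teams that track these deadlines in internal compliance tooling, the timeline above could be encoded roughly as follows. This is only an illustrative sketch: the dates are the ones listed above, while the labels are shortened paraphrases and the names `MILESTONES`, `milestones_reached` and `next_milestone` are hypothetical helpers invented for this example, not part of any official tool.

```python
from datetime import date

# Milestone dates taken from the timeline above; labels are shortened paraphrases.
MILESTONES = [
    (date(2025, 2, 2), "General provisions and prohibited AI practices"),
    (date(2025, 8, 2), "Obligations for GPAI model providers"),
    (date(2026, 2, 2), "Commission guidelines on high-risk classification due"),
    (date(2026, 8, 2), "Remaining provisions, except Article 6(1)"),
    (date(2027, 8, 2), "Article 6(1) and associated obligations"),
]


def milestones_reached(on: date) -> list[str]:
    """Return the labels of all milestones dated on or before `on`."""
    return [label for when, label in MILESTONES if when <= on]


def next_milestone(on: date):
    """Return (days_remaining, label) for the first milestone after `on`, or None."""
    for when, label in MILESTONES:
        if when > on:
            return (when - on).days, label
    return None


if __name__ == "__main__":
    # Date of the Commission press conference mentioned earlier in the article.
    check_date = date(2025, 7, 4)
    print(milestones_reached(check_date))
    print(next_milestone(check_date))
```

A lookup like this only tells you which milestone dates have passed. Whether a given obligation actually applies to a particular model or provider still requires legal assessment.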

The EU AI Act Remains a Top Priority for Regulators. Is Your Team Prepared?

The European Commission's firm stance sends a clear message: AI regulation remains a top priority, even under pressure from business and competition. While industry leaders warn that regulatory complexity could slow down innovation and investment, the EU seems determined to maintain its role as a global standard-setter in AI regulation.

Companies operating in the EU—or relying on AI models that serve European markets—should prepare for full compliance with the AI Act’s provisions. There will be no delay. Instead, the focus now shifts to implementation guidelines, standardisation efforts, and collaboration between regulators, developers, and industry.

Prighter continues to closely monitor developments related to the AI Act and its upcoming obligations, offering strategic insight and guidance for businesses navigating this evolving legal landscape.

If you’d like support in managing your obligations under the EU AI Act, book a free consultation with Prighter today, and discover how our EU AI Authorised Representative Services could benefit your team.